Large language models (LLMs) are a type of artificial intelligence (AI). LLMs are trained on very large amounts of text (and, in some systems, transcribed speech) so they can process language as data. That training helps them work with context-rich information and create text (or speech) that sounds like it comes from a human being.

How do LLMs work?

Large language models learn patterns of meaning from the data they are trained on. Then, LLMs use deep learning to form answers to questions, offer translations, and write new content based on what they've learned. Some large language models are open-source (like LLaMA and BLOOM), and others are proprietary (like Claude and GPT). They are often described as operating on four interconnected layers that help them recognize words and make meaning. Each layer plays a specific role in processing the data input and generating the output.

Here's an example: A customer asks Google Gemini, "Help me find an UrgentCare that's open right now near me."

The embedding layer converts each word (or word fragment) into a vector, a list of numbers that represents its meaning. Ex. "urgent" and "open" would be mapped to different vectors.

The feedforward layer transforms each word's vector to capture more of the complexity of its meaning. Ex. "UrgentCare" might be understood as "non-life-threatening."

The attention mechanism weighs the contextual relationships between the words and judges which words are most important to the request. Ex. It might weigh "open now" higher than "near me."

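The recurrent layer, in architectures that include one, carries information from earlier inputs forward so the model can use it when it presents a response. (Many current transformer-based LLMs rely on attention over the conversation so far rather than on recurrence.) Ex. "Here's a map showing the nearest non-emergency medical care centers open right now near you. If you have a life-threatening emergency, dial 911."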
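To make the embedding and attention steps more concrete, here is a minimal Python sketch. It is a toy illustration, not how Gemini or any production model is implemented: the three-number vectors in `EMBEDDINGS` are invented for this example, and real models learn vectors with hundreds or thousands of dimensions. The sketch looks up a vector for each word, then uses scaled dot-product attention to score how strongly the word "open" relates to every other word in the request.

```python
import math

# Toy 3-dimensional embeddings. These numbers are invented for illustration;
# a real model learns its vectors during training.
EMBEDDINGS = {
    "urgent": [0.9, 0.1, 0.0],
    "care":   [0.8, 0.2, 0.1],
    "open":   [0.1, 0.9, 0.2],
    "now":    [0.0, 0.8, 0.3],
    "near":   [0.1, 0.2, 0.9],
    "me":     [0.0, 0.1, 0.8],
}

def embed(tokens):
    """Embedding step: replace each word with its vector."""
    return [EMBEDDINGS[token] for token in tokens]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    highest = max(scores)
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]

def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over all keys."""
    scale = math.sqrt(len(query))
    return softmax([dot(query, key) / scale for key in keys])

tokens = ["urgent", "care", "open", "now", "near", "me"]
vectors = embed(tokens)

# How much does "open" attend to each word in the request?
weights = attention_weights(vectors[tokens.index("open")], vectors)
for token, weight in zip(tokens, weights):
    print(f"{token:>6}: {weight:.2f}")
```

With these made-up vectors, "open" gives more weight to "now" than to "me," which mirrors the idea of the model treating "open now" as a closely related pair.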

What is RAG and how do LLMs use it?

RAG, or Retrieval-Augmented Generation, bridges the gap between what an LLM knows and what it can discover. LLMs have foundational knowledge based on training data, and with RAG, LLMs can pull in information from new data sources at the moment a question is asked. The retrieved information is added to (it "augments") the prompt, which helps LLMs generate more accurate, up-to-date, and reliable answers.

Because LLMs train on static data that can become obsolete, they might not have the latest information at hand. RAG lets them look up current information before they respond. RAG-based systems can also cite their sources, lending transparency and building trust.
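The retrieve-then-generate flow can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions: the documents, the `example` source names, and the helper functions `retrieve` and `build_prompt` are all hypothetical, and simple keyword overlap stands in for the vector search a real RAG system would typically use. The sketch stops at building the prompt; sending it to an LLM is left out.

```python
import re

# Hypothetical documents standing in for a fresh, external data source.
DOCUMENTS = [
    {"source": "clinic-hours.example/downtown",
     "text": "Downtown urgent care is open today from 8 am to 10 pm."},
    {"source": "clinic-hours.example/northside",
     "text": "Northside urgent care is closed for renovation this week."},
    {"source": "blog.example/flu-season",
     "text": "Flu season tips: wash your hands and rest."},
]

def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(query, documents, top_k=2):
    """Retrieval step: rank documents by how many query words they share.

    Keyword overlap is a stand-in here; production systems usually compare
    embedding vectors instead.
    """
    query_words = tokenize(query)
    scored = [(len(query_words & tokenize(doc["text"])), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]

def build_prompt(query, retrieved):
    """Augmentation step: put the retrieved text, with sources, in the prompt."""
    context = "\n".join(
        f"[{number}] {doc['text']} (source: {doc['source']})"
        for number, doc in enumerate(retrieved, start=1)
    )
    return (
        "Answer using only the context below and cite sources by number.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )

query = "Which urgent care is open right now?"
retrieved = retrieve(query, DOCUMENTS)
prompt = build_prompt(query, retrieved)
print(prompt)  # The generation step would send this prompt to an LLM.
```

Because each retrieved passage carries its source into the prompt, the model has what it needs to point back to where an answer came from.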

How LLMs impact search and brand visibility

As customers use AI search experiences more and more, customer trust in AI search is increasing. Customers value the direct, conversational answers that LLMs offer (instead of the traditional SERPs filled with keyword-driven links that customers have to sift through to find answers).

Brands and digital marketers need to think less about individual keywords and more about becoming the go-to resource for their topic area.

Optimizing for traditional SEO vs. optimizing for discoverability in LLMs

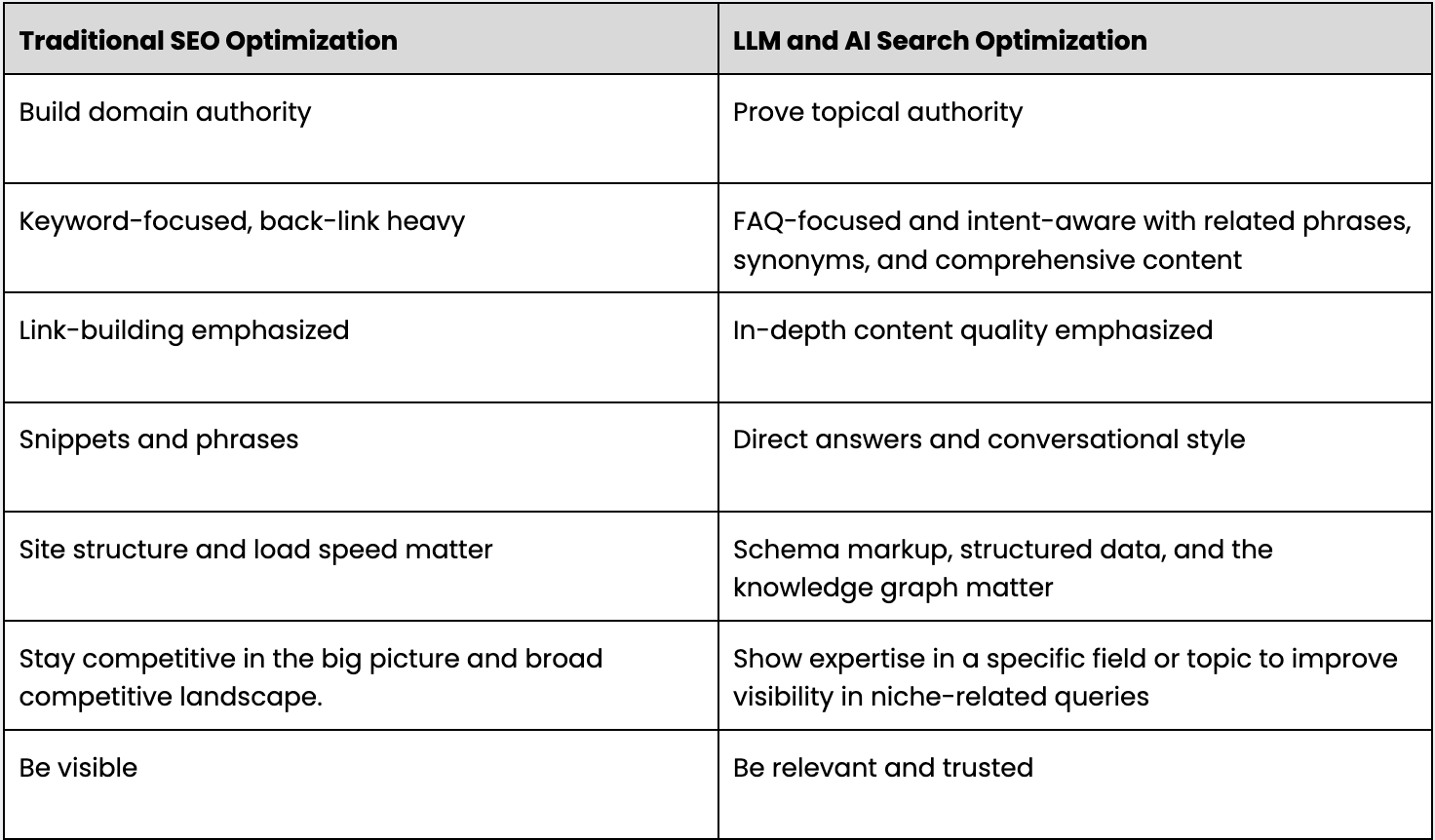

Traditional SEO was built around establishing domain authority (the overall power and trustworthiness of your website as a whole). Modern SEO has shifted to include establishing topical authority (being recognized as an expert in a specific subject area because of your larger visibility strategy).

To optimize for LLMs and stay visible in AI search, there are new rules. Your visibility strategies have to evolve from the old SEO playbook. LLMs don't care how many backlinks you have. They care about how good, useful, and accessible your content is.

Here's a comparison chart to help your brand succeed in optimizing for both traditional engines and LLM or AI-led search experiences:

As generative search results increase and improve, SERP and ranking signals are shifting

With LLMs and AI-driven search experiences, the goal isn't to game SEO or AI platforms. Instead, the goal is to give every AI platform all of the structured knowledge it needs to recognize search intent and then reply to customers with the information they need to continue on their customer journey.

That's why brands now need to align their brand, digital marketing, and content strategies with their data strategy.

Preparing your content and data strategy for LLMs and AI search

Here are six tips to adapt your content and strategy for LLMs and AI-driven search:

Audit your content. Assess how well your existing content answers common customer questions that show up in all your digital channels.

Structure content around FAQs. Use the data from your audit to directly answer the questions coming from customers along their journey.

Optimize for voice search. Create content based on what you learn about how users phrase queries verbally. Notice the types of questions customers string together.

Create comprehensive guides (and distribute content in bite-sized formats, too). Develop in-depth, authoritative content that covers topics exhaustively.

Continue to focus on E-E-A-T. Keep emphasizing your Experience, Expertise, Authoritativeness, and Trustworthiness in your content. That isn't going away.

Launch a knowledge graph with structured data. A knowledge graph forms the base for centralizing and sharing your brand information across all digital channels. The knowledge graph keeps your data organized, accurate, and interconnected. In turn, brands use the knowledge graph to improve content consistency, increase visibility, and build trust across every touchpoint.
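As one way to picture the structured data behind a knowledge graph, the Python sketch below keeps brand facts in one place and publishes them as schema.org JSON-LD, a common format for describing entities to search engines and other crawlers. Everything about the brand here is hypothetical (the name, URLs, phone number, hours, and FAQ), and `to_json_ld` is a helper written for this example, not part of any library. The schema.org types used (`Organization`, `LocalBusiness`, `FAQPage`, `Question`, `Answer`) are real, but which types fit your brand is a judgment call.

```python
import json

# Hypothetical brand facts, kept in one place so every channel can reuse them.
brand = {
    "id": "https://www.example.com/#organization",
    "name": "Example Urgent Care",
    "url": "https://www.example.com/",
}
locations = [
    {
        "id": "https://www.example.com/locations/downtown#clinic",
        "name": "Example Urgent Care Downtown",
        "telephone": "+1-555-0100",
        "opening_hours": "Mo-Su 08:00-22:00",
    },
]
faqs = [
    ("Do I need an appointment?",
     "No. Walk-ins are welcome during opening hours."),
]

def to_json_ld(brand, locations, faqs):
    """Build a schema.org JSON-LD document that links the entities together."""
    graph = [{
        "@type": "Organization",
        "@id": brand["id"],
        "name": brand["name"],
        "url": brand["url"],
    }]
    for location in locations:
        graph.append({
            "@type": "LocalBusiness",
            "@id": location["id"],
            "name": location["name"],
            "telephone": location["telephone"],
            "openingHours": location["opening_hours"],
            # The link back to the brand is what makes this a graph.
            "parentOrganization": {"@id": brand["id"]},
        })
    graph.append({
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    })
    return {"@context": "https://schema.org", "@graph": graph}

structured_data = to_json_ld(brand, locations, faqs)
print(json.dumps(structured_data, indent=2))
```

The printed JSON could be placed in a `<script type="application/ld+json">` tag on the relevant page. Generating it from one set of records, rather than hand-editing each page, is what keeps the facts consistent across touchpoints.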