AI Citations, User Locations, & Query Context

Location-Level Analysis of 6.8 Million AI Citations Reveals Why Geographic Context Should Be the Foundation of Brand Visibility Strategy

Christian Ward, Anthony Rinaldi, Adam Abernathy, and Alan Ai, 2025

Oct 9, 2025

Introduction

This study examines 6.8 million source citations from 1.6 million responses generated by the three major AI models. It highlights the importance of the user's intent, location, and memory (context) conditions as key frameworks for understanding AI search visibility.

Most current research on AI citations is based on a brand-level perspective that ignores the user's location or context. This brand-focused approach explains why sources like Wikipedia or Reddit often appear as top citation sources. While this method works well for establishing a brand voice, it doesn't accurately reflect typical citation patterns when individual users engage with the AI.

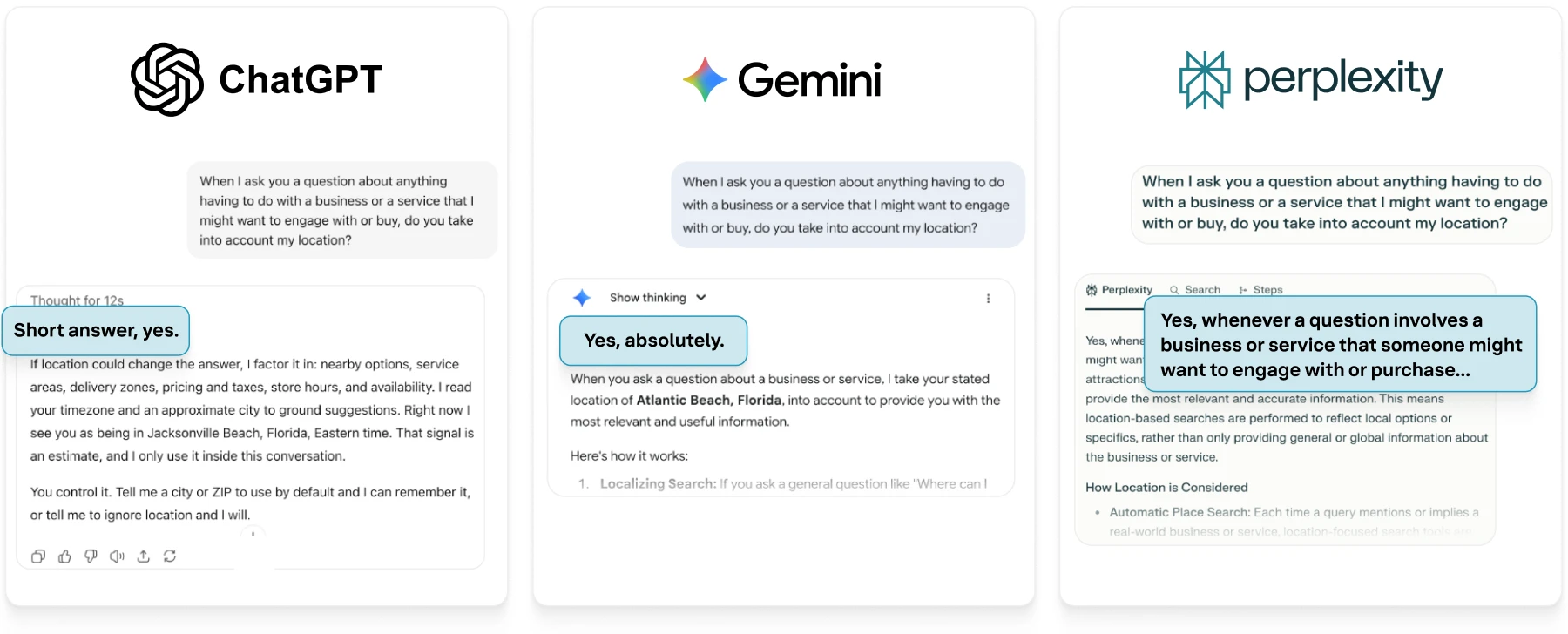

Once the user's location and context are considered, as they are for most business-related questions, the citation patterns shift significantly. This location-first approach is a stated function of the AI models themselves. When asked directly, models from Google, OpenAI, and Perplexity confirm they use a consumer's location when responding to questions about businesses and services.

To quantify these implications, we tested location-focused queries across four major industries. Using a systematic four-quadrant framework (branded/unbranded and objective/subjective), the findings show material differences in how AI platforms source and cite information. The variations by industry, query type, and consumer context establish location-level analysis as the new foundation for AI visibility strategy.

This research presents a clear and strategic path for brands to strengthen their local visibility in AI.

Key Findings

Location-level analysis shows citation and source patterns that are undetectable with current brand-level studies. A retail chain might report a 47% first-party citation rate nationally, but location analysis could reveal rates of 70% in rural markets and 20% in competitive urban markets, where aggregators are more prevalent. These geographic variations make national metrics less helpful for a local visibility strategy.
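The arithmetic behind this point can be sketched in a few lines of Python. The segment names and citation counts below are hypothetical, chosen only so that the rates match the illustrative 70%, 20%, and 47% figures in the paragraph above; they are not data from the study.

```python
from dataclasses import dataclass


@dataclass
class MarketSegment:
    """Citation counts for one group of locations (hypothetical data)."""
    name: str
    total_citations: int        # all citations observed in this segment
    first_party_citations: int  # citations pointing to brand-owned sites

    @property
    def first_party_rate(self) -> float:
        return self.first_party_citations / self.total_citations


def national_rate(segments: list[MarketSegment]) -> float:
    """Pool all segments into one citation-weighted national rate."""
    total = sum(s.total_citations for s in segments)
    first_party = sum(s.first_party_citations for s in segments)
    return first_party / total


# Hypothetical counts, picked to reproduce the rates quoted in the text.
segments = [
    MarketSegment("rural", total_citations=5400, first_party_citations=3780),
    MarketSegment("urban", total_citations=4600, first_party_citations=920),
]

for segment in segments:
    print(f"{segment.name}: {segment.first_party_rate:.0%}")
print(f"national: {national_rate(segments):.0%}")
```

Under these assumed counts, the pooled national figure lands at 47% even though neither segment is close to that rate, which is why the aggregate alone says little about any single market.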

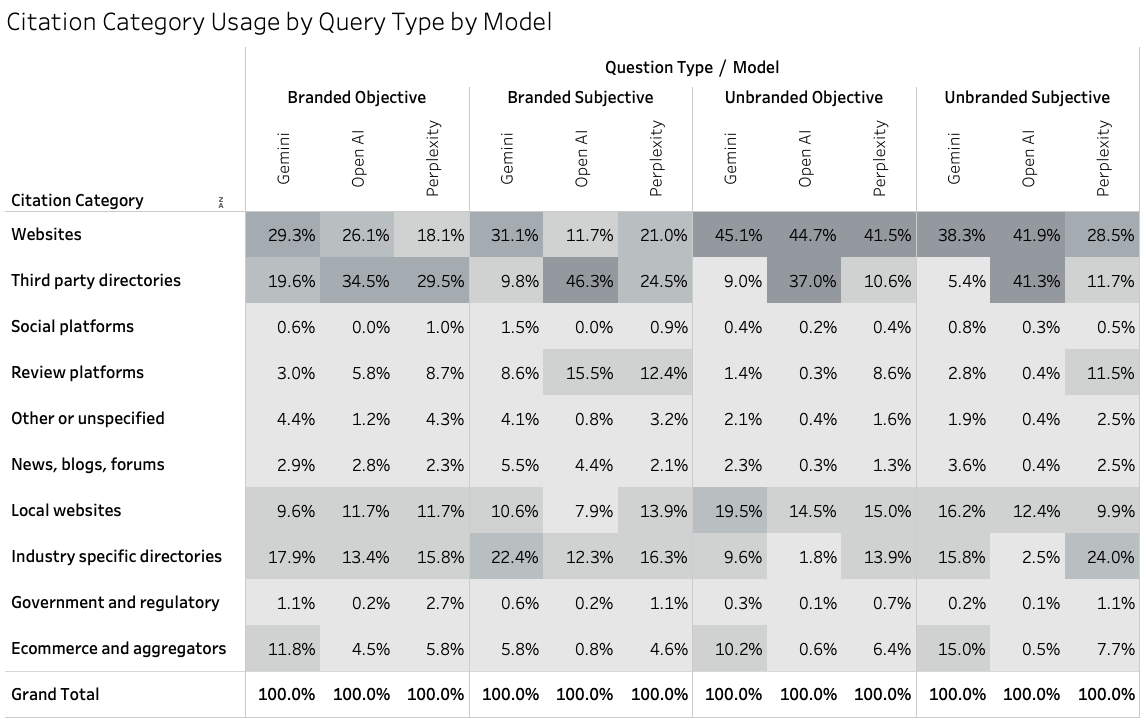

- The Power of First-Party Content for Factual Queries. When consumers ask objective, unbranded questions (e.g., "What [category] is near me?"), websites are the dominant citation source across all three models, accounting for over 40% of citations for Gemini, OpenAI, and Perplexity. This is the single largest opportunity for brands to control their narrative by providing clear, factual information on their own web properties.

- OpenAI's Heavy Reliance on Directories for Subjective Queries. When a query becomes subjective (e.g., "What is the best...?"), OpenAI's citation behavior shifts dramatically. For both branded and unbranded subjective queries, third-party directories become a primary source, peaking at 46.3% for Branded Subjective questions. This means that for opinion-based questions on OpenAI, a brand's presence in directories is paramount.

- The Focus on Industry-Specific Directories for Perplexity. Perplexity consistently shows a strong preference for industry-specific directories, especially for subjective queries where expertise is a factor. For Unbranded Subjective queries, these specialized directories account for 24% of its citations, the highest of any model. This indicates that for Perplexity, optimizing for niche, industry-relevant platforms is a key strategic lever.

- The Untapped Potential of Local Websites. Across nearly all models and query types, local websites consistently represent a significant citation source, ranging from 8% to nearly 20% in some cases (Unbranded Objective on Gemini). This highlights the strategic importance of maintaining distinct, content-rich pages for each business location.

AI search from the consumer's point of view

Viewing AI citations from a generalized, brand-level perspective, as most current visibility research does, leaves the geospatial aspects of search unexamined. A true brand-level analysis needs to account for realistic user behaviors by aggregating granular, location-based AI queries in context, a process that reveals a far more nuanced source pattern.

Visibility in AI is not monolithic; citation behavior changes based on the consumer's location, the context of their questions, the industry, the AI model, and the individual citation sources themselves.

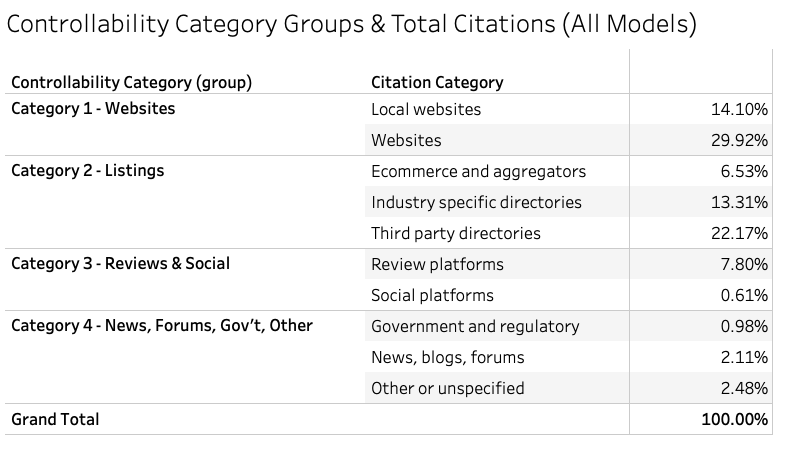

The relationship between a brand's level of control, the effort required, and the resulting AI citation usage offers a powerful framework for prioritizing actions.

The Location-Context Framework

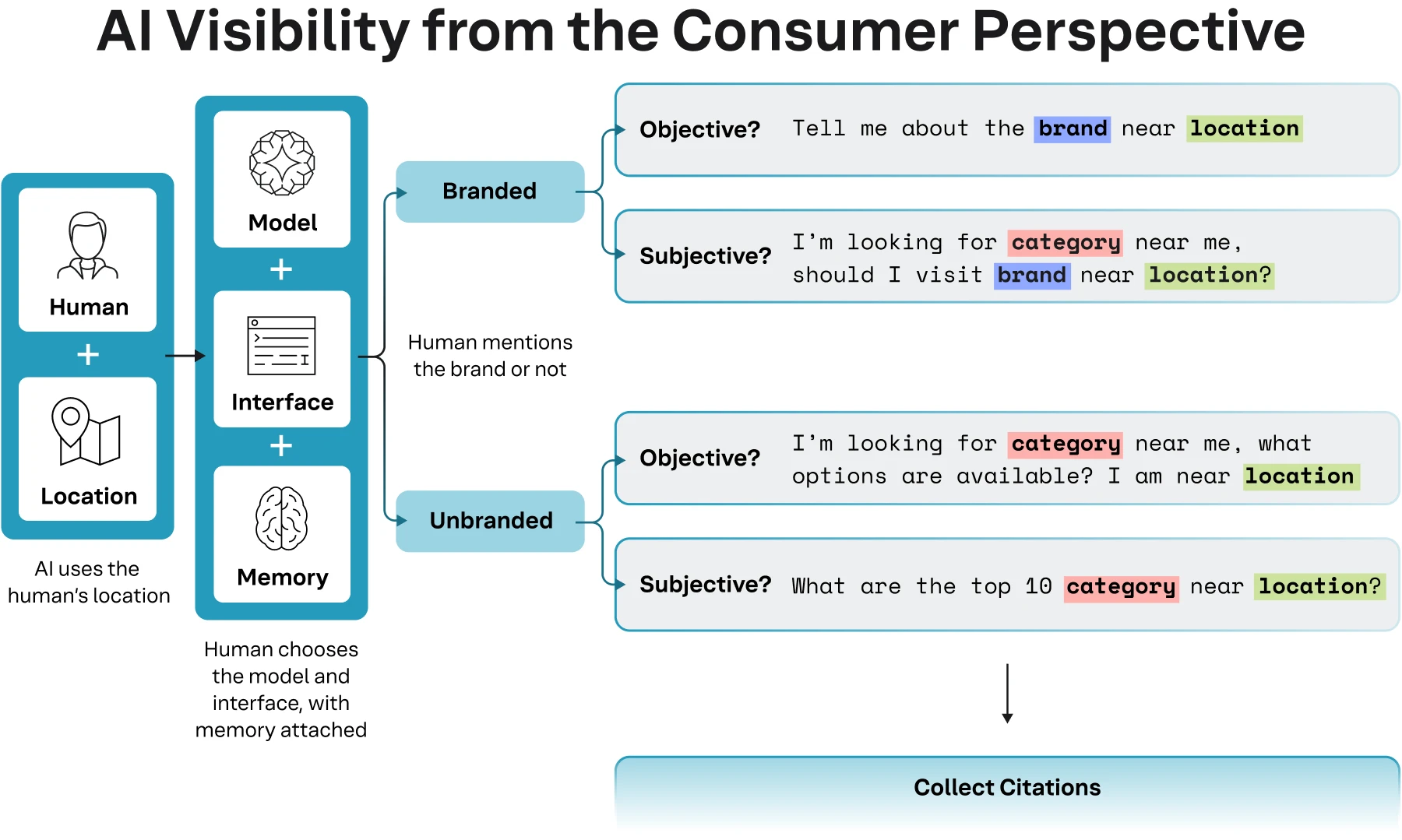

AI visibility must be understood through a consumer lens. To systematically study AI behaviors from a consumer's point of view, we've developed the Location-Context Framework, a model that begins with a consumer's location and then factors in question types to understand citation patterns. This contrasts with current research based on brand-level analysis, which may use a "query fan-out" approach where the AI generates subsequent questions. While useful for brand commentary, that method leads the AI to provide commentary rather than answers to specific consumer needs, which is why general sources like Wikipedia or forums often appear.

Figure 1: Instead of a generated fan-out, our Location-Context Framework follows a clear and predictable path.


The Location-Context Framework defines a clear and predictable path that simulates the average consumer's behavior when engaging with an AI model on various search-related topics.

- A consumer has a need and is in a specific location. The AI uses this geographic context as the foundational filter, similar to classic search (Google).

- The consumer chooses a model (like Gemini or ChatGPT) and an interface. The consumer also brings their conversational memory to the session, which can influence subsequent responses.

- The consumer intrinsically frames their query along two dimensions: branded vs. unbranded and objective vs. subjective.

Our results show a clear tradeoff. As a brand's control over a source increases, so does the potential for it to be used as a citation in AI responses. However, this often requires greater effort. The strategic opportunity lies in finding the right balance. While "Full Control" sources, such as first-party websites, offer the highest efficacy, "Controllable" listings on third-party sites represent a critical sweet spot, delivering a high volume of citations with a moderate level of management effort. Our proposed framework allows a brand to move beyond a reactive approach and proactively focus its resources on the activities that will have the greatest impact on its AI visibility.

Table 1


While many brand-level studies indicate that forums like Reddit are a dominant citation source, applying a location-based consumer perspective reveals that these sources appear far less frequently, accounting for just over 2% (about 154,000) of all citations. This does not diminish their importance for brand commentary, but it shows that for location-specific consumer queries, their role changes.

Defining AI citation sources by level of brand control

It is necessary to categorize citation sources based on a brand's capacity to control the information they contain. The Control Framework sorts citation sources into four distinct categories, ranging from sources that a brand fully owns to those where it has no direct influence. This strategic classification enables a brand to prioritize its efforts by focusing resources where they can have the most impact.

Figure 2


Category 1: Full Control (Websites) includes a brand's own first-party digital properties. Brands have complete and direct control over all content. This category is the most cited in the study, with over 2.9 million citations coming from corporate domains (e.g., wendys.com), local pages (e.g., locations.bankofamerica.com), and other brand-owned web assets.

Category 2: Controllable (Listings) encompasses a wide range of third-party directories and platforms where a brand can claim and manage its profile. While the brand does not own the platform, it can directly control the accuracy of its information. This category accounts for over 2.9 million citations and includes industry-spanning platforms, such as Google Business Profile and MapQuest, as well as industry-specific directories like TripAdvisor (Food/Hospitality) or Zocdoc (Healthcare).

Category 3: Influenced (Reviews & Social) covers platforms where content is primarily user-generated, but brands can actively participate. This category is driven by reputation and accounts for over 545,000 citations from sources like Google Reviews, Yelp, and Facebook.

Category 4: Uncontrolled (News, Forums, Other) includes all sources where a brand has no direct control. An interesting finding from this research is the relatively low citation count for this category.

The most powerful finding from this data is an optimistic one: brands can directly influence or manage the sources that account for approximately 86% of all consumer-facing citations. This high degree of control is revealed only by shifting from a brand-level to a location-level perspective.
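As a rough illustration of how the four control categories could be applied to raw citation URLs, the sketch below buckets each URL by domain and computes each category's share. The lookup tables, the naive two-label domain rule, and the sample URLs are all assumptions made for this example; the study does not describe its classification procedure at this level of detail.

```python
from collections import Counter
from enum import Enum
from urllib.parse import urlparse


class Control(Enum):
    FULL = "Full Control (Websites)"
    CONTROLLABLE = "Controllable (Listings)"
    INFLUENCED = "Influenced (Reviews & Social)"
    UNCONTROLLED = "Uncontrolled (News, Forums, Other)"


# Hypothetical lookup tables; a real mapping would cover far more domains.
BRAND_DOMAINS = {"wendys.com", "bankofamerica.com"}
LISTING_DOMAINS = {"mapquest.com", "zocdoc.com"}
REVIEW_SOCIAL_DOMAINS = {"yelp.com", "facebook.com"}


def registered_domain(url: str) -> str:
    """Naive assumption: the last two hostname labels identify the site."""
    host = urlparse(url).hostname or ""
    return ".".join(host.split(".")[-2:])


def classify(url: str) -> Control:
    """Assign a citation URL to one of the four control categories."""
    domain = registered_domain(url)
    if domain in BRAND_DOMAINS:
        return Control.FULL
    if domain in LISTING_DOMAINS:
        return Control.CONTROLLABLE
    if domain in REVIEW_SOCIAL_DOMAINS:
        return Control.INFLUENCED
    return Control.UNCONTROLLED  # anything unrecognized falls through here


def category_shares(urls: list[str]) -> dict[Control, float]:
    """Return each category's fraction of the given citations."""
    counts = Counter(classify(u) for u in urls)
    total = sum(counts.values())
    return {c: (counts[c] / total if total else 0.0) for c in Control}


# Hypothetical sample of cited URLs.
sample = [
    "https://www.wendys.com/",
    "https://locations.bankofamerica.com/",
    "https://www.mapquest.com/",
    "https://www.zocdoc.com/",
    "https://www.yelp.com/",
    "https://www.reddit.com/",
]
shares = category_shares(sample)
for category, share in shares.items():
    print(f"{category.value}: {share:.1%}")
```

Defaulting unknown domains to the Uncontrolled category is a deliberately conservative choice here: it avoids overstating how much of the citation mix a brand can manage.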

Table 2


By understanding the citation patterns at individual consumer locations, a brand can aggregate that data to build a precise, actionable strategy for improving its visibility in AI. Without this granular understanding, it is nearly impossible to build a clear path to reaching the consumer where they are.

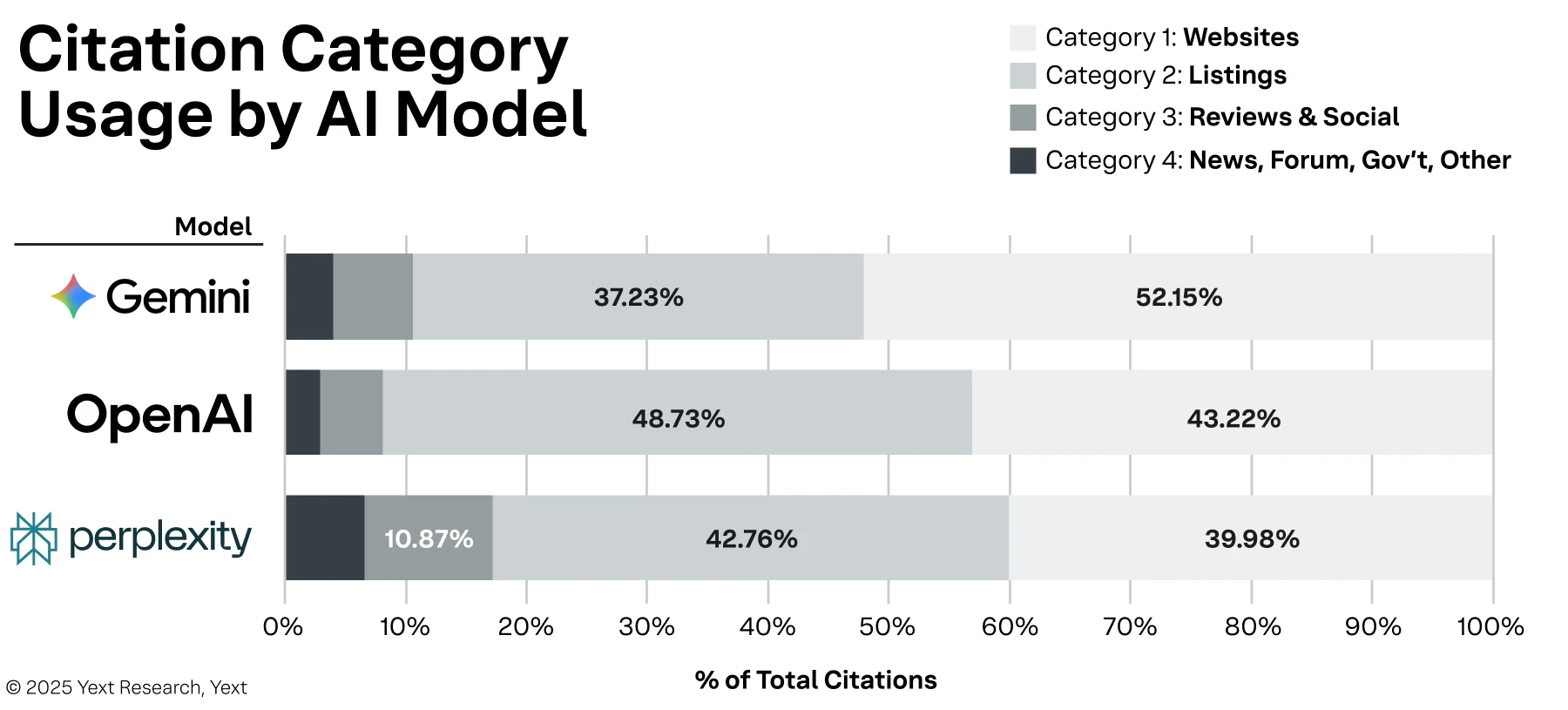

Model differences

Citation behavior is not uniform across AI models; in fact, it varies significantly. We find that a brand's visibility strategy must account for the distinct sourcing preferences of each platform.

Figure 3


The models differ markedly in the sources they cite. Gemini shows a strong preference for first-party websites, drawing 52.15% of its citations from these fully controlled sources. In contrast, OpenAI relies heavily on listings, with 48.73% of its citations coming from these controllable third-party platforms. These preferences become even more pronounced when examining individual domains. OpenAI's reliance on listings is overwhelmingly concentrated on Google, which accounted for over 465,000 citations. Perplexity, on the other hand, diversifies its sources, favoring MapQuest for listings (over 364,000 citations) and TripAdvisor for reviews (over 239,000 citations).

It is also important to note what is not counted as a citation. Gemini, for example, utilizes Google Business Profile information in its responses but does not cite it as a source because it is proprietary data.

These behavioral nuances mean that a brand's presence in one model does not guarantee its visibility in another, a topic that will be explored in subsequent analysis.

Differences by industry

Similar to our earlier findings when looking at Google Business Profiles, AI citations by vertical reveal distinct patterns of behavior.

We would be naive to assume homogeneous behavior across industries. We quickly found a unique blend of citation sources for each industry. The mix of brand-owned sites (Category 1) and third-party sources (Categories 2-4) ultimately determines the extent of a company's control over its narrative in AI-generated results.

(Note: In-depth reports for Finance, Food Service, Healthcare, and Retail will be released separately.)

Figure 4


Noticeable differences by industry vertical:

- Financial Services and Retail show the greatest potential for first-party control, with websites (Category 1) accounting for 48.16% and 47.62% of their citations, respectively. The regulated nature of finance and the e-commerce infrastructure of retail drive AI models to authoritative, brand-owned sources.

- Healthcare exhibits the heaviest reliance on third-party validation, with listings (Category 2) accounting for 52.61% of all citations. This reflects the importance of medical directories, licensing databases, and insurance platforms in establishing trust.

- Food Service presents a unique challenge, with a significant dependency on both listings (41.63%) and reviews (13.28%). That Category 3 share is the highest of any industry, showing that for food service brands, reputation and user-generated content are critical factors in AI visibility.

Methodology

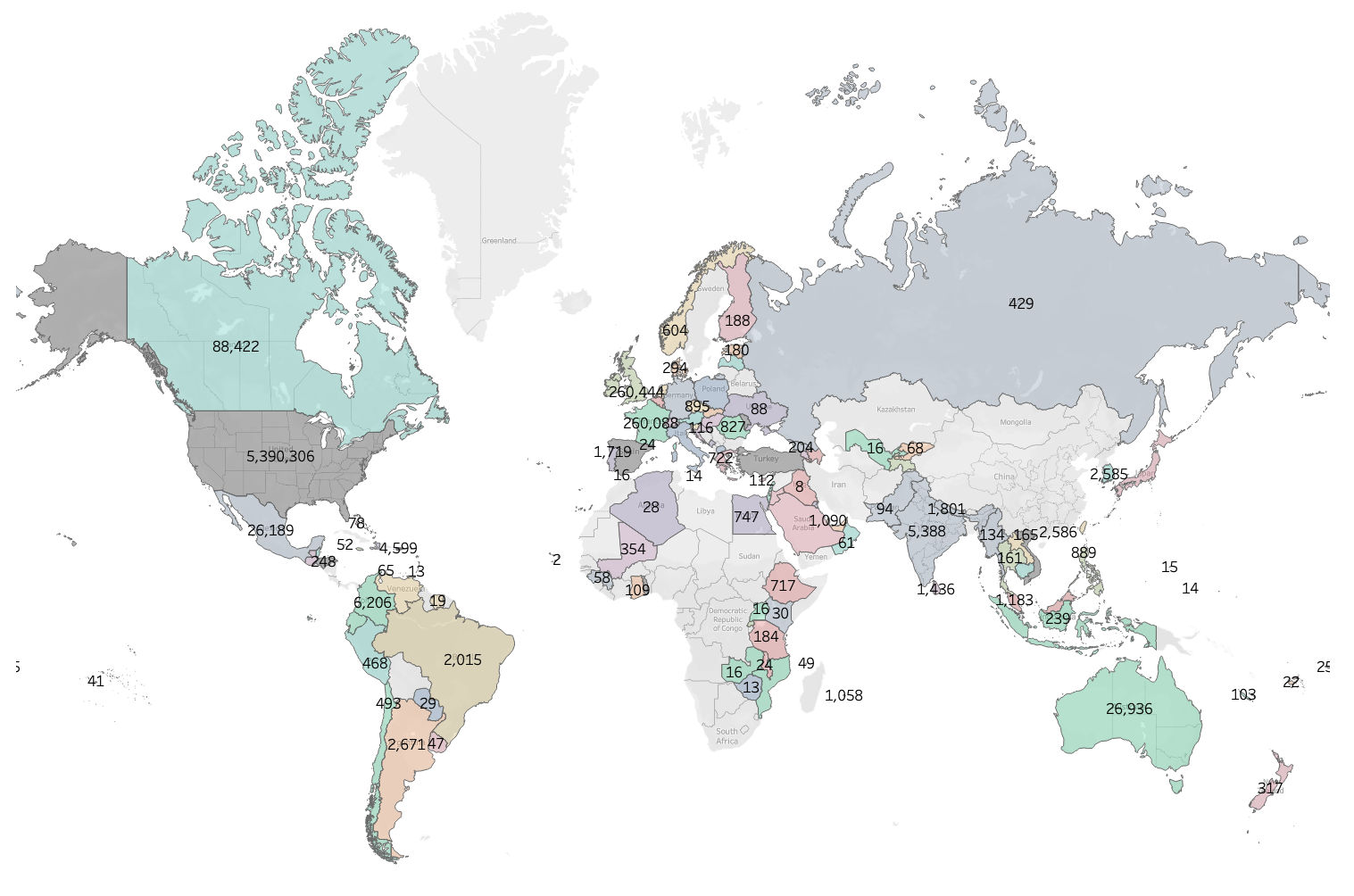

This research is based on the analysis of 6.8 million citations collected from AI search result responses between July 1, 2025, and August 31, 2025. The data was gathered from a global study of Yext clients and prospects utilizing the Yext Scout platform. Although the data originates from a specific user set, the breadth of sources cited is substantial, encompassing a long tail of 20,820 unique domains.

Using an automated system, roughly 1.6 million individual questions were asked for each of the three major AI models: Gemini, OpenAI, and Perplexity. The queries were designed to represent four distinct consumer-intent quadrants (Branded Objective, Branded Subjective, Unbranded Objective, and Unbranded Subjective) and were tested at every client and prospect location across four key industries: Finance, Food, Healthcare, and Retail. This data was gathered using the individual APIs provided by each model.
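The query design described above amounts to a cross product of locations, models, and intent quadrants. The sketch below shows one way such a grid could be generated. The prompt templates, the field names, and the example brand and cities are hypothetical; the actual prompts and the API calls used in the study are not reproduced here.

```python
from itertools import product

MODELS = ["Gemini", "OpenAI", "Perplexity"]

# Hypothetical prompt templates, one per consumer-intent quadrant.
QUADRANT_TEMPLATES = {
    "Branded Objective": "Where is the nearest {brand} to {location}?",
    "Branded Subjective": "Is {brand} a good choice near {location}?",
    "Unbranded Objective": "What {category} is near {location}?",
    "Unbranded Subjective": "What is the best {category} near {location}?",
}


def build_query_plan(locations: list[dict], models: list[str] = MODELS) -> list[dict]:
    """Expand every location x model x quadrant combination into a prompt."""
    plan = []
    for loc, model, (quadrant, template) in product(
        locations, models, QUADRANT_TEMPLATES.items()
    ):
        plan.append(
            {
                "model": model,
                "quadrant": quadrant,
                "location": loc["location"],
                "prompt": template.format(**loc),
            }
        )
    return plan


# Hypothetical locations for a made-up brand.
locations = [
    {"brand": "Example Burger", "category": "hamburger restaurant", "location": "Austin, TX"},
    {"brand": "Example Burger", "category": "hamburger restaurant", "location": "Boise, ID"},
]

plan = build_query_plan(locations)
print(len(plan))  # 2 locations x 3 models x 4 quadrants = 24
print(plan[0]["prompt"])
```

Each entry in the plan would then be sent to the corresponding model's API, with the returned citations stored alongside the model, quadrant, and location so that results can later be grouped along any of those dimensions.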

Figure 5: This study collected 6.8 million citations globally.


Geo-location's impact on AI search

Models from Google, OpenAI, and Perplexity confirm that they factor a consumer's location into their responses about businesses and services. We believe this is fundamental to how these systems prioritize relevance. Just as a traditional search engine defaults to showing nearby restaurants when you type "pizza," today's AI models use geolocation signals to anchor their answers in physical space. Whether the input comes from an IP address, device settings, or a user's explicit prompt, location appears to be a weighting factor in the model's calculus of what constitutes a useful response.

The effect is subtle but significant. Two people asking the same question in different locations may receive different answers, shaped by local business data, service coverage, and even regional language use. That means "search" in the AI era is no longer a purely abstract query-resolution process. AI-powered search is inextricably tied to the user's location and the local knowledge graph surrounding them.

Figure 6: All three major AI models account for location as part of their RAG process.


Classifying queries by intent

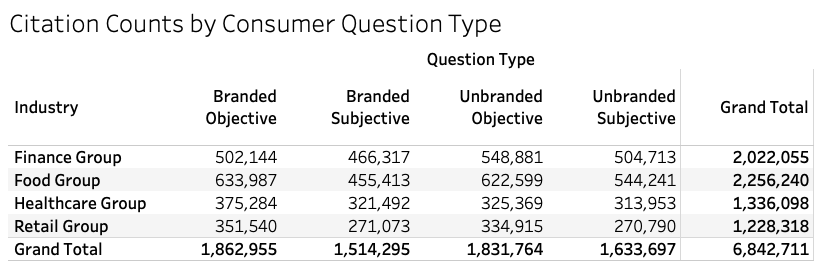

We introduce a structured approach to determining query types in relation to underlying intent. The Query Classification Framework (QCF) features four distinct query quadrants that reflect true consumer behavior and yield different citation patterns. Objective queries seek factual information, like "[Hamburger Restaurant] near me." Subjective queries seek opinions, like "What are the top 10 [Hamburger Restaurants] near [Location]?" This framework allows for a more precise analysis of how AI models respond to real-world consumer needs.
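To make the four quadrants concrete, here is a minimal sketch of a rule-based classifier that places a query on the two axes. The cue-word list and the brand-name matching are simplifying assumptions for illustration only; the study does not state how queries were assigned to quadrants, and a production classifier would need something more robust.

```python
import re
from dataclasses import dataclass

# Hypothetical cue words that suggest an opinion-seeking (subjective) query.
SUBJECTIVE_CUES = re.compile(
    r"\b(best|top|worst|favorite|recommend\w*)\b", re.IGNORECASE
)


@dataclass(frozen=True)
class Quadrant:
    branded: bool
    subjective: bool

    @property
    def label(self) -> str:
        brand_axis = "Branded" if self.branded else "Unbranded"
        intent_axis = "Subjective" if self.subjective else "Objective"
        return f"{brand_axis} {intent_axis}"


def classify_query(query: str, known_brands: set[str]) -> Quadrant:
    """Place a query in a quadrant using simple keyword heuristics."""
    lowered = query.lower()
    branded = any(brand.lower() in lowered for brand in known_brands)
    subjective = bool(SUBJECTIVE_CUES.search(query))
    return Quadrant(branded=branded, subjective=subjective)


brands = {"Example Burger"}  # hypothetical brand list
examples = [
    "hamburger restaurant near me",
    "What are the top 10 hamburger restaurants near Austin?",
    "Example Burger hours near me",
    "Is Example Burger the best option near me?",
]
for q in examples:
    print(f"{classify_query(q, brands).label}: {q}")
```

Keeping the two axes as separate booleans, rather than a single four-valued label, makes it straightforward to slice citation data by either dimension on its own.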

Figure 7


As shown, the citations tracked across query types are mostly evenly distributed, although there are larger industry variations. The design of this framework allows the analysis to move beyond an aggregate view. By tracking the results of individual question types on a model-by-model basis, this research can precisely identify what citation sources each AI model prefers, given a consumer's location, their specific query, and the industry context.

Applying the QCF to our query/citation set yields this mostly even distribution, with the most noticeable differences between industries appearing in "objective" questions.

Table 3


Limitations and Future Research

This analysis is based on data from Yext clients and prospects, encompassing over 200,000 locations where they operate or may operate. The Yext Scout platform directly imports all available citations from the APIs of the individual AI models. It is important to acknowledge the differences between citations returned via an API and those presented in a direct user application. The inherent volatility of large language models means that an individual's conversational context, specific model usage, and conversational memory can all affect responses. Consequently, substantial variation can occur on a user-by-user basis. However, by analyzing thousands of contextual prompts for brands and businesses, we think the resulting citation source patterns are likely indicative of general usage patterns within a particular location.

Areas for Additional Research

The findings of this report open several avenues for more granular research. Future analysis will include:

- Model-Specific Deep Dives: A detailed breakdown of citation patterns for each individual AI model, to be released in subsequent sub-reports that expand on the high-level differences identified in this report.

- Industry Sub-Reports: Analysis of the specific domains and citation sources that appear most frequently within the individual industry reports for Finance, Food, Healthcare, and Retail.

- Geographic Variation Analysis: A quantitative analysis of how citation patterns differ between geographic market types, such as dense urban centers and more rural locations, to build on the initial observations in this study.

- Longitudinal Tracking: Ongoing monitoring of citation sources to understand how patterns evolve as the underlying AI models are updated and retrained over time.

Conclusion

The findings of this research establish a new perspective on AI visibility. A generalized, brand-level analysis is no longer sufficient, as it fails to capture the source patterns that emerge from real-world consumer queries. True understanding must be built from the ground up, aggregating granular, location-based data that reflects the specific context of a consumer's needs.

This location-first approach reveals a logical path forward. While current brand-level analysis often highlights a wide array of uncontrolled sources, this study shows that for specific consumer queries, AI models rely on a more structured set of information. Brands can directly control or influence the vast majority of these sources. The most effective strategy is the deliberate management of a brand's first-party websites, third-party listings, and online reputation.

This study, based on two months of data from prospects and customers on the Yext Scout platform, is only the beginning.

As AI models evolve, this method for location-level analysis provides a durable, customer-first approach to assessing brand performance in AI search.